Studia

Stories about my recording and mixing process in the home studio.

Zetalot 2020 (Alexander Dove, 2017)

I let this song lie a little while before posting about it, because I somewhat foolishly released it not that long before Christmas when I was about to get pretty busy and distracted – as were most potential listeners. It’s a simple, folky, somewhat stupid little song about a semi-professional Hearthstone player who plays under the alias Zetalot. (Hearthstone: a popular digital card game set in the universe of the Warcraft games that I grew up on.) I wrote the song on the plane back from a weekend in New York and had it fully recorded and mixed a month later, and the thrill of working that efficiently was a big part of what made me want to release it almost immediately (when saving it for January might have been more savvy.)

I recorded the song starting with the guitar, and from there into a “scratch” vocal – i.e. something to help follow the parts of the song but intended to be replaced with a better vocal take (or composite of the best parts of several takes) later. However, I ultimately liked the initial recording and kept it. I increasingly think that 2-3 takes should almost always be enough for vocals, if the performance decisions (how this or that line should be sung) are already made. This isn’t really counting flubs or false starts, partial takes where all the lyrics completely vanished from your mind, etc. But two solid takes will probably contain a good enough rendition of every line or section, and if it’s not the case you can go in and get another take of just that troublesome spot. And as long as I’m pontificating and pretending to know jack shit: I think vocal comping (compositing) should be done at the largest feasible level of structure – meaning, take the best take of an entire verse or chorus, or at least the best take of a complete line, rather than the syllable-by-syllable painstaking comping that seems to be so prevalent in the modern music industry.

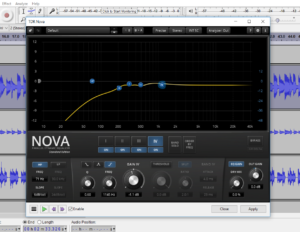

Speaking of austere principles I’ve stolen from better and more experienced engineers, I completely went against one in this song, by indulging in a bit of utter fix-it-in-the-mixery. If that sounds opaque to you: there is a general precept among audio engineers, averred by all and adhered to by almost none, that you should, whenever possible, record things the way you want them to sound, rather than capture something problematic and say, “we’ll fix it in the mix.” I broke this rule when it came to the bass in this song, which I played in an enthusiastic way that felt extremely satisfying but which left me with a recorded sound that had a huge, vaguely flatulent attack to every note, way out of proportion to the sustaining tone. This was particularly true on the choruses and intro – I think I didn’t rerecord it mostly because I liked the sound on the verses, where I’d played softer. I ended up wrestling with the bass tracks quite a bit trying to tamp this down, with compressors with different attack settings (mostly short to try and push down this obnoxious transient), dynamic EQ (TDR Nova) and even a tape emulation (Variety of Sound FerricTDS) on the bass bus.

These are the various fancy plugins I was calling into service on my bass bus (there were still others on the two individual bass tracks).

So that part was a slight misadventure, but my overall experience was super fun. After the guitar and vocals (which were recorded with my Rode NTK tube condenser) my main approach was simply: pick different microphones for different instruments, stick them in front of me, and record the part. I think I used a Line Audio CM3 on the baritone ukulele and my sE 2200a II on the soprano uke – unless that was also the CM3. I need to take notes. I definitely used the 2200a II on tambourine, in figure-8 mode. The last thing I recorded was my self-harmony on the second and third choruses, and I don’t remember which mic I used for that either. In any case, the recording generally went well, even if I didn’t take notes and screwed up the bass and had to fix it. And besides, Zetalot liked it:

@alexanderdove wrote and recorded a song about my dedication to Priest class, check it out guys, it’s awesome PogChamp

https://t.co/kGwUmuRqGZ (SK Zetalot, @Zetalot2, January 3, 2018)

Goat (Sea Piglet, 2017)

This song is about the most fun I’ve ever had. This is another track by my good friend Beki, under the name of Sea Piglet. In addition to recording and mixing, I played cajon, bass, and other percussion, as well as contributing some … distinctive sounds toward the end. The goals with recording this were firstly to capture the energy of the song when we all play it together; secondly to get a feeling of ’90s rock on the grunge/punk/garage-band spectrum of things (the song was inspired partly by a Pixies song); and thirdly to have a great time throughout the process and hope that could come through in the recording.

The somewhat ridiculous, janky, and potentially misguided way we recorded:

In the same small room (the former bedroom where I have my studio set up) we had my brother Iain playing acoustic guitar, Beki singing vocals, and me playing cajon, all simultaneously; the guitar was into a cheap small-diaphragm condenser (Behringer C-2) while the vocals and cajon were both just into all-purpose Shure SM57s. These mics went into my old Mackie mixer (although the vocal mic went first into my dbx 286A) and were panned hard left (guitar mic), center (vocal mic), and hard right (cajon mic.) From there the mixer outputs went into the line inputs of my Focusrite 2i4 interface.

This is my workaround for using three or more microphones with only two inputs (just as I did on Lavender Earl Gray.) However, once in the computer, I started having a lot of trouble using Audacity to separate the three elements (left, center, and right). It seemed that the mid-side plugins I was using (such as Variety of Sound’s Rescue), or their interaction with Audacity, or… possibly… just the nature of mid-side processing in the first place… meant that I couldn’t truly separate the three components. However – once I bailed on Audacity and finally made the call to start using Reaper, I pretty much found that I could at least sort it out into vocals and a couple tracks of instruments, though of course there was not perfect isolation (as there wasn’t meant to be).
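For the curious, here is a rough sketch of why mid-side processing alone can't pull three hard-panned sources cleanly apart: two channels only give you two independent signals (their sum and their difference), and there are three unknowns. This is a toy model, not what any particular plugin does. The function names are made up for illustration, and the 0.5 gain for the center-panned mic is an assumption (real mixers follow their own pan law).

```python
def pan_to_stereo(guitar, vocal, cajon, center_gain=0.5):
    """Guitar hard left, cajon hard right, vocal split equally to both sides.

    center_gain is an assumed value; an actual mixer's pan law may differ.
    """
    left = [g + center_gain * v for g, v in zip(guitar, vocal)]
    right = [c + center_gain * v for c, v in zip(cajon, vocal)]
    return left, right


def mid_side(left, right):
    """Mid is what the channels share on average; side is half their difference."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


# One-sample "blips" so each source is easy to spot in the result.
guitar = [1.0, 0.0, 0.0]
vocal = [0.0, 1.0, 0.0]
cajon = [0.0, 0.0, 1.0]

left, right = pan_to_stereo(guitar, vocal, cajon)
mid, side = mid_side(left, right)

print(mid)   # vocal is here, but so is half of the guitar and half of the cajon
print(side)  # no vocal at all, but guitar and cajon are tangled (one inverted)
```

In this toy version the side signal is vocal-free, but the mid always carries some guitar and cajon along with the vocal, so the best you can get is "mostly vocal" plus "instruments", which matches what ended up being workable.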

A Reaper project!

The end result: vocals with guitar and cajon in them, cajon with guitar and vocals in it, and guitar with cajon and vocals in it. In fact, the better part of the cajon sound (other than the very initial attack) came from the guitar mic. This means that I couldn’t manipulate each element totally independently, but everything I did (for example) to the vocal sound would also affect, to some degree, the overall guitar sound.

One way I got around this was by taking the vocal and sending a copy of that track to a separate bus where I rolled off a lot of low and low-mid frequencies on the bottom, and for good measure the super-high frequencies on the top, so I had just a narrow band that was primarily vocal sound without most of the body of the guitar. That copy of the vocal is what I applied distortion to, and what I sent to the delay effects (echoes.) This is, to put it mildly, much, much easier to do in Reaper than it would have been in Audacity. It is possible in Audacity, mind you, but holy cow is it less convenient. In this project, I auditioned several distortion effects and changed my mind repeatedly about which one to use, after having the delay set up. In Audacity, I would have had to back up, redo several steps, try the new distortion, and then send that to the delays. In Reaper, I could go back and change something like the distortion (or the high-pass filter before the distortion) without having to redo everything further down the chain.

Note: that inflexibility is also what I always liked about working in Audacity. Because I couldn’t go back and change things after the fact, I had to try my best to get them right the first time, and commit to those choices. A lot of people out there will talk about the desirability of committing to ideas and not endlessly tweaking, but in practice it seems everyone likes the flexibility of infinite fidgeting.

The SansAmp RBI is great for getting bass amp tones without an amp. Could probably use digital amp emulators, but having real knobs to turn makes me feel special.

After that initial session where we got guitar, cajon, and vocals, we had a separate session of goat noises with myself, Iain, and our friend Cara, and separately I recorded bass (two layers, one clean and one distorted, through the SansAmp RBI by Tech 21) as well as tambourine and ride cymbal. I did those percussion elements with one of my newer mics, the sE 2200a II, which is fun for experimentation because it has multiple polar patterns – it can capture sound in all directions equally (omni), from the front and back (figure-8), or just in front (cardioid). I ended up using it in figure-8 for the tambourine and in cardioid for the ride cymbal, although I tried it in omni as well, and it seemed like that would sound great for a softer or jazzier context. It was amusing to me (possibly just because I’m easily amused) that these minor percussion parts were getting recorded with a nicer, more expensive mic than any of the main parts of the song, since the lead vocals were recorded through a standard old SM57.

I’ll stop rambling on now. Go forth and goat.

Lavender Earl Gray (Alexander Dove, 2017)

This song is the B-side to Lonesome George, a single I put out at the end of July. I think arguably the single itself has some production / mixing mistakes that I hope to improve on in the eventual album version – it’s all a learning process, after all. This B-side, however, was a lot of fun and involved a lot of embracing happy accidents.

First of all, the distorted, almost 8-bit sounding bits at the beginning and end are from my first attempt at recording the guitar, when my Focusrite Scarlett 2i4 interface was having an error that it would often have, where audio recorded through it was crushed in this way until I unplugged and reconnected it. This seems to be something that happens when these interfaces are used just for input and something else (in that case, my laptop’s built-in soundcard) is used for output. Ever since I’ve had my speakers connected to the Focusrite, there’s been no problem. In this particular instance, I was tickled by the distorted audio and felt the need to preserve some of it for the intro and outro.

I ended up not recording the song until much later, and recorded guitar and vocals together with a somewhat silly three-mic setup, with two ribbon mics (Cascade Fatheads) in stereo around the guitar, and my Rode NTK in front of my face for vocals. Ribbon mics always record in a figure-8 pattern, capturing sound in two directions and rejecting sound from the sides – I pointed the sides of the Fatheads towards my face to try and keep the main vocal sound out of the guitar, while they would inevitably catch some of the echoes from the room.

All three mics were going into my old Mackie mixer, with the ribbons hard left and right and the vocal mic panned center. The stereo outputs went into the Focusrite to record a stereo track into Audacity. The idea of recording this way occurred to me partly because I’d recently discovered the excellent Variety of Sound plugins such as Density. These plugins have the capability for “Mid/Side” processing, aka distinguishing between the information that overlaps between the stereo channels (the mid) and the information that differs between them (the sides) in order to process them separately. This means I could rebalance between the middle mic (mostly vocals) and the sides (guitar and room sound) and treat them differently with EQ, compression, and saturation.
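Here is a minimal sketch of the mid/side idea in code, for anyone who wants to see the arithmetic. It uses the standard sum-and-difference formulas; it is not how Density or any other specific plugin is implemented, and the function names and gain parameters are hypothetical.

```python
def encode_mid_side(left, right):
    """Mid = average of the two channels, side = half of their difference."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


def decode_mid_side(mid, side):
    """Inverse of encode_mid_side: left = mid + side, right = mid - side."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


def rebalance(left, right, mid_gain=1.0, side_gain=1.0):
    """Turn the mid and the sides up or down independently, then go back to stereo."""
    mid, side = encode_mid_side(left, right)
    mid = [m * mid_gain for m in mid]
    side = [s * side_gain for s in side]
    return decode_mid_side(mid, side)


# Two stereo samples: the first is identical in both channels (pure mid),
# the second is equal and opposite (pure side).
left = [0.5, 0.25]
right = [0.5, -0.25]

mid, side = encode_mid_side(left, right)
quieter_sides = rebalance(left, right, mid_gain=1.0, side_gain=0.5)
```

With both gains left at 1.0, `rebalance` hands back the original stereo signal unchanged. EQ, compression, or saturation on just the mid or just the sides would slot in where the gains are applied.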

Density by Variety of Sound

The other happy accident came when two of my takes magically turned out incredibly close together in timing, so much so that I was able to use the slightly weaker take as a kind of jerry-rigged slap delay (the slight echo effect you can hear on the lead vocals and particularly the more percussive guitar strums). I took the alternate take, compressed it pretty hard, rolled off the high and low frequencies, and placed it slightly after the main take with an amount of delay that I found to have a fun rhythmic energy to it. Because the “delay” is actually another organic performance, there is a slight ebb and flow in the amount of delay that feels (to my ear) less robotic while still not sounding like anything found in nature.
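As a rough illustration of the "second take as slap delay" trick, the sketch below just slides one take later in time and mixes it in underneath the other. The compression and filtering steps are left out, the function names are made up, and the delay time and gain in the example are placeholder values, not the settings used on the track.

```python
def ms_to_samples(delay_ms, sample_rate):
    """Convert a delay in milliseconds to a whole number of samples."""
    return round(delay_ms * sample_rate / 1000)


def layer_delayed_take(main_take, alt_take, delay_samples, alt_gain=0.5):
    """Mix alt_take under main_take, shifted later by delay_samples."""
    length = max(len(main_take), len(alt_take) + delay_samples)
    mixed = [0.0] * length
    for i, x in enumerate(main_take):
        mixed[i] += x
    for i, x in enumerate(alt_take):
        mixed[i + delay_samples] += alt_gain * x
    return mixed


# Tiny example: a single click in each take, with the alternate placed 2 samples late.
main_take = [1.0, 0.0, 0.0]
alt_take = [1.0, 0.0, 0.0]
mixed = layer_delayed_take(main_take, alt_take, delay_samples=2, alt_gain=0.5)
```

A plugin delay would repeat the exact same audio at a fixed offset; here the "echo" is a different performance, so the real gap between each note and its echo drifts a little from moment to moment.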

What more needs to be said? It’s a silly little song, and I wanted to keep the feeling of a rough bedroom recording. A couple happy accidents and some playing around made the rest of the track. Oh, and thanks to my friend SJ for sharing the tea, and Jeremiah for the mug, that made the song exist in the first place.

I Don’t Mind (Sea Piglet, 2017)

Hi! Welcome to Studia, my third soon-to-be defunct blog on this site. In this one, I will write a little bit about the recording and mixing of each song I work on after it’s gone out into the world, whether it’s my own solo thing, a friend’s music I’ve recorded/“produced,” or something from my Scottish/Irish band, Dòrain. Hopefully it’ll be a narrative of continuous learning and improvement, since I’ve only just this year gotten over ten years of saying, “I can’t learn how to do this – I don’t understand technical things – I don’t have ‘that kind of brain.’” Now I’m trying to learn everything I can while putting it into practice making the best music I can.

This is a song by my friend Beki, who records under the name Sea Piglet. She’s become a prolific songwriter out of almost nowhere, and I’ve been having a lot of fun recording her songs; this one was recorded in my little bedroom studio when my recording stuff was all crowded into a small bedroom with all my other worldly possessions.

We recorded the piano into Audacity (I use Audacity instead of a proper DAW; more on that later, maybe) with the two audio outputs from my roommate’s piano going to the two inputs of my Focusrite 2i4 interface. I have a Thing about MIDI that I should probably get over at some point, so right now I always just record the audio from the keyboard. Fortunately, my roommate’s keyboard sounds really good. After that we recorded Beki’s vocal with my best available mic, my Rode NTK tube condenser. I’m not sure this is the best mic for Beki’s voice (though her voice sounds fantastic no matter what.) I tried a different, non-tube condenser (borrowed from my roommate) on her recently and I think I liked it better.

Free VST plugins like TDR Nova make Audacity a lot more powerful.

After that, Beki left and I was left to my own devices to flesh the song out. My brother added ukulele (recorded with the Rode) and I added bass, guitars and shakers. I own and attempt to play a beautiful acoustic-electric bass guitar that has a pickup but also a lovely acoustic sound to it, so the way I usually try to capture it is a two-pronged approach. I plug the bass into the Focusrite interface to record a DI, but then also put the Rode mic on it to capture the acoustic sound, string noise and whatnot. I split the DI part into two parts, one low-passed to be just the low frequencies, the other high-passed to be everything else; the mic signal is also high-passed so the lows from the DI stand alone. (During mixing I decide if I want to use the higher part of the DI, or just the mic sound and the lows.) On some songs I do just the DI, when I want less of an acoustic bass sound, but for most of Sea Piglet’s songs I want that feel.
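To make the DI split a bit more concrete, here is a small sketch of dividing one signal into a "lows" part and an "everything else" part. It uses a very gentle one-pole filter and gets the high band by subtracting the lows from the original, which is an assumption made to keep the example short; a real EQ or crossover plugin will have much steeper filters. The function names and the 120 Hz cutoff are placeholders.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Very gentle low-pass filter (6 dB per octave)."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out = []
    state = 0.0
    for x in samples:
        state += alpha * (x - state)  # drift toward the input at a rate set by the cutoff
        out.append(state)
    return out


def split_bands(samples, cutoff_hz, sample_rate):
    """Return (low, high), where high is simply whatever the low-pass removed."""
    low = one_pole_lowpass(samples, cutoff_hz, sample_rate)
    high = [x - l for x, l in zip(samples, low)]
    return low, high


# A constant (0 Hz) signal should end up almost entirely in the low band.
di_signal = [1.0] * 2000
low, high = split_bands(di_signal, cutoff_hz=120.0, sample_rate=44100)
```

Because the high band is defined as the leftover, adding the two bands back together gives the original DI signal again, so muting or keeping the high part at mix time is a clean either/or choice.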

For the guitars and shakers I just recorded through an unassuming SM57, which I felt clever about (for the guitars). I had nicer mics available, but I didn’t really want the guitar to shine and sparkle and cut through, but just unobtrusively fill things out. I ended up pretty happy with the choice.

I won’t say much about the mixing, I think – it’s safe to say that I used the TDR Kotelnikov compressor and/or the TDR Nova dynamic EQ on pretty much everything, because those plugins are so versatile and high-quality. The one thing obviously worth commenting on – I had the silly idea to copy and paste Beki’s singing from the very end to the intro, and make that section sound like an old record or radio transmission. I put the vocals through an aggressive high-pass and low-pass filter (the “telephone effect”) and compressed it like hell, then ran the whole section through iZotope Vinyl, a free plugin from a company that also makes quite expensive plugins, which makes things sound like an old record – you have a dial to pick the era of records you’re imitating, and sliders for factors like wear, dust, and scratches. The amusing thing is that iZotope also sells the tools to get rid of all those types of artifacts – of course the company that specializes in audio cleanup/restoration also knows how to put all the dirt and wear back in. Putting it in is so much easier than taking it out that they don’t even charge for it.

iZotope Vinyl for the “old record” feel.

Well – we’ll call that a blog post. I’m not 100% sure what the audience for this is meant to be, and if I should explain all the things/equipment/techniques I mentioned in passing up there. Let me know, maybe?